Research

Augmented Reality (AR) is “the integration of digital information with the user’s environment in real time” (Gillis, 1999). Unlike Virtual Reality, which provides a fully artificial environment, AR lets users experience a real-world environment with generated perceptual information overlaid on top of it. AR delivers visual elements, sound, or other sensory information mainly through a smartphone or similar device rather than a headset. The overlaid object can either be added to the environment or mask part of it. The aim of this project is to become familiar with producing AR content and to introduce the audience to a mixed reality through their smartphones.

Many people now encounter AR in everyday life, often without realising it. A famous example is ‘Pokémon Go’, a popular game released in 2016. The idea behind the game neatly describes how AR works: “To create a game that would not only use digital technology but also employ physical involvement.” (studiousguy.com, 2023). The user walks around in the real world and catches Pokémon through their smartphone camera as they go. With the help of augmented reality, the characters appear to be part of the user’s surroundings. A more complex and niche example is the use of AR in professions such as neurosurgery. With emerging technology, AR can act as an assistant that digitally highlights blocked and damaged nerves. This can help reduce the possibility of human error and support precision in a delicate vocation.

AR raises several considerations around sensor technology, such as interpreting depth and space, handling lighting conditions, and recognising objects and symbols. Advanced AR applications incorporate all of these to ensure a positive user experience. It is essential that the producer picks the type of AR technology that best suits the intended experience. Marker-based AR, the most common type, uses patterns such as QR codes as a trigger for the object. Location-based AR instead relies on the user’s GPS or compass so that virtual objects appear in a specific location. In the following tasks, I will be focusing on Unity WebGL and Zapworks, using marker-based AR with QR codes. Zapworks offers no-code tools designed to let designers create immersive experiences whatever their skill set or objectives.
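
To illustrate the location-based approach described above, here is a minimal Python sketch of how an app might decide whether a virtual object should appear. It is only an illustration under assumptions: the function names, the 25 m radius, and the coordinates in the usage note are hypothetical, and it is not taken from Unity or Zapworks. It uses the haversine formula to measure the distance between the user’s GPS position and a chosen anchor point.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def should_show_object(user, anchor, radius_m=25.0):
    """Return True when the user's GPS fix is within radius_m of the anchor.

    `user` and `anchor` are (latitude, longitude) tuples in degrees.
    The default radius is an assumed value chosen for illustration only.
    """
    return haversine_m(*user, *anchor) <= radius_m
```

For example, `should_show_object((51.5007, -0.1246), (51.5007, -0.1246))` returns `True` because the user is standing exactly on the anchor point, while a user one degree of latitude away (roughly 111 km) would not see the object.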

Methodology

Setting up the Unity scene

In the first task, I will be learning how to create AR experiences that use image trackers and WebGL to produce online-hosted AR content. The first piece of software I am using is Unity with its WebGL build platform, which allows users to access AR content through a web browser. I will then upload my AR designs to Zapworks, which provides web hosting for AR content so that it is stored in the cloud rather than locally.

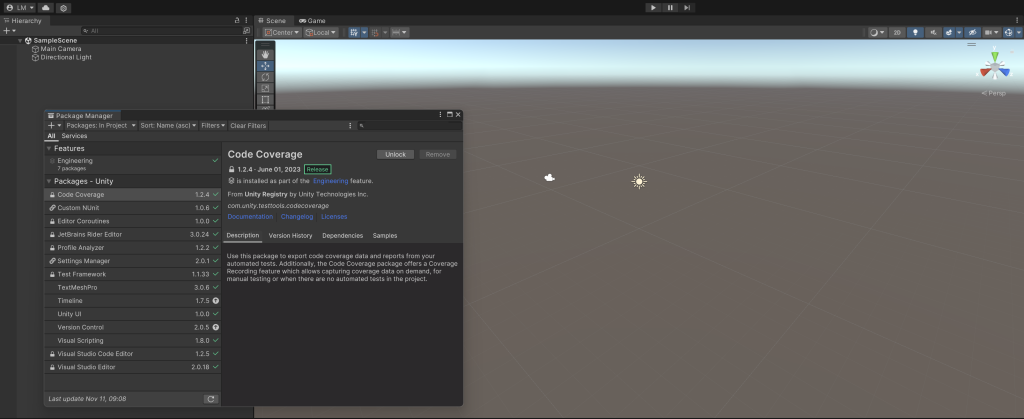

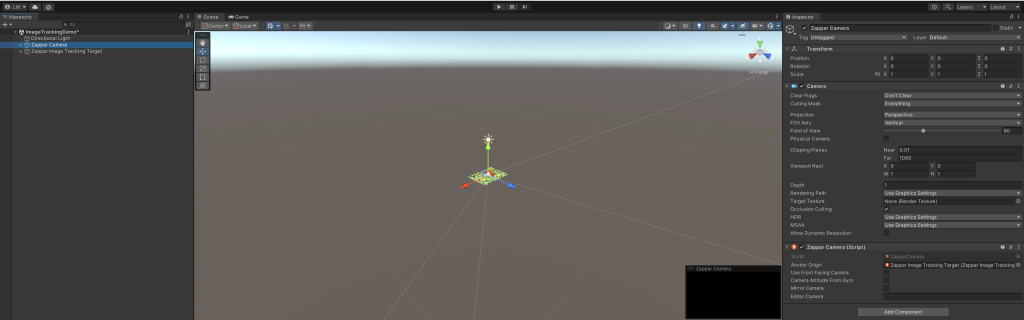

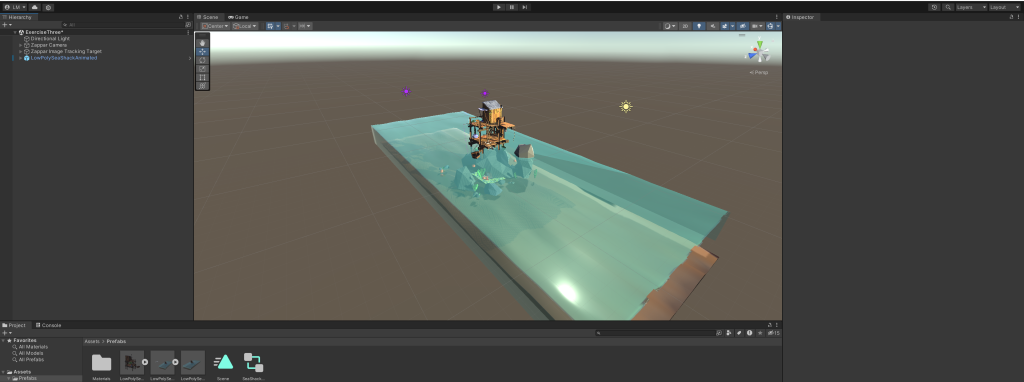

To begin the tutorial, I downloaded the zipped assets and launched Unity Hub. I then switched the build platform in Unity to WebGL so that the transition to Zapworks would be seamless. After installing the Zappar Universal AR SDK, I set up the scene for image tracking using the Zappar camera. Once the set-up was complete, it was time to add the custom content.

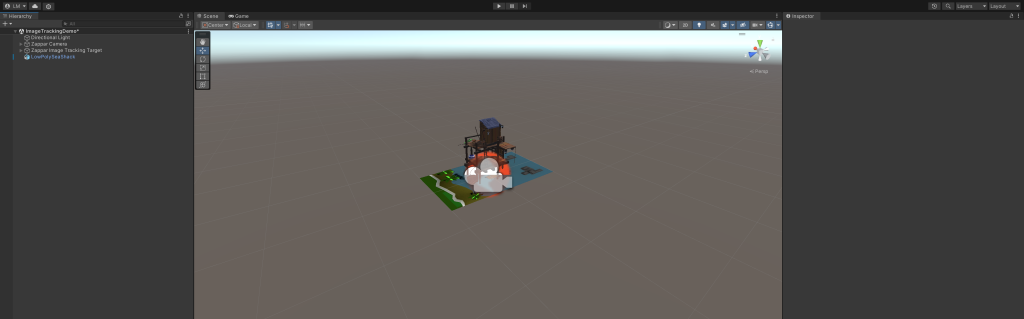

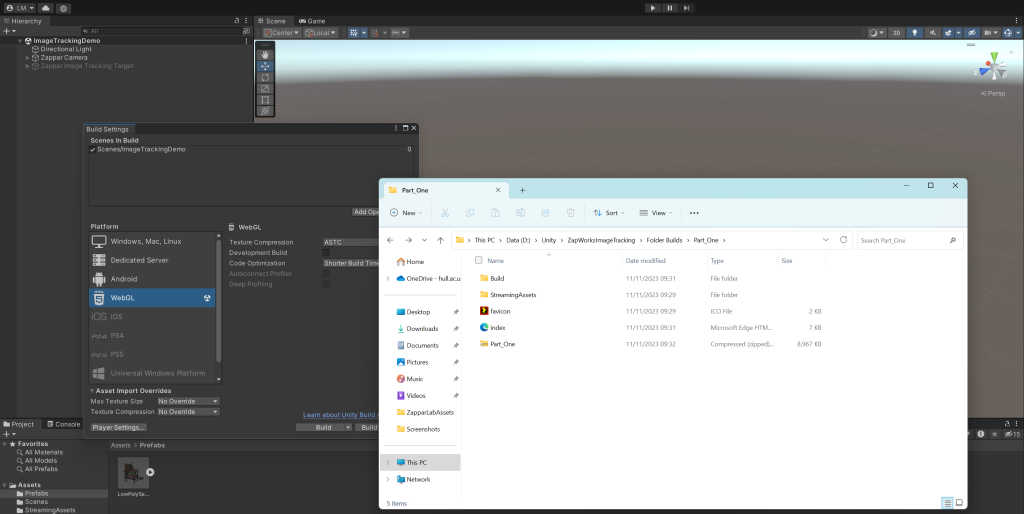

Next, I set up the image to be trained for tracking using the Zappar tools and imported the assets. In the image trainer, I imported the shack file so that the scene showed the image as the preview target. With the image imported, I could bring in the 3D asset and position it relative to the image. I then set the 3D model up to load with the image tracker so that the program activates the game object whenever the camera detects the image tracking target. The final stage was building the scene in Unity to produce the file structure needed for Zapworks. Once the build process had finished, I compressed the build folder into a zip file so that it would load correctly on the Zapworks servers.
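
The zipping step can also be scripted. The following is a minimal Python sketch rather than part of the tutorial itself. It assumes that the Unity WebGL build folder contains an `index.html` file and that this file should sit at the root of the archive. The function name and the folder names in the usage note are hypothetical.

```python
import zipfile
from pathlib import Path


def zip_build_folder(build_dir, zip_path):
    """Zip the contents of build_dir so that index.html sits at the archive root.

    Assumption: the build output contains index.html at its top level.
    Write the zip outside build_dir so the archive does not include itself.
    """
    build_dir = Path(build_dir)
    if not (build_dir / "index.html").is_file():
        raise FileNotFoundError(f"No index.html found in {build_dir}")

    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(build_dir.rglob("*")):
            if path.is_file():
                # Store paths relative to the build folder, not the full disk path
                archive.write(path, path.relative_to(build_dir).as_posix())
    return Path(zip_path)
```

Calling `zip_build_folder("MyBuild", "MyBuild.zip")` would produce an archive whose entries are stored relative to the build folder, for example `index.html` and `Build/...`, instead of being nested inside an extra `MyBuild/` directory.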

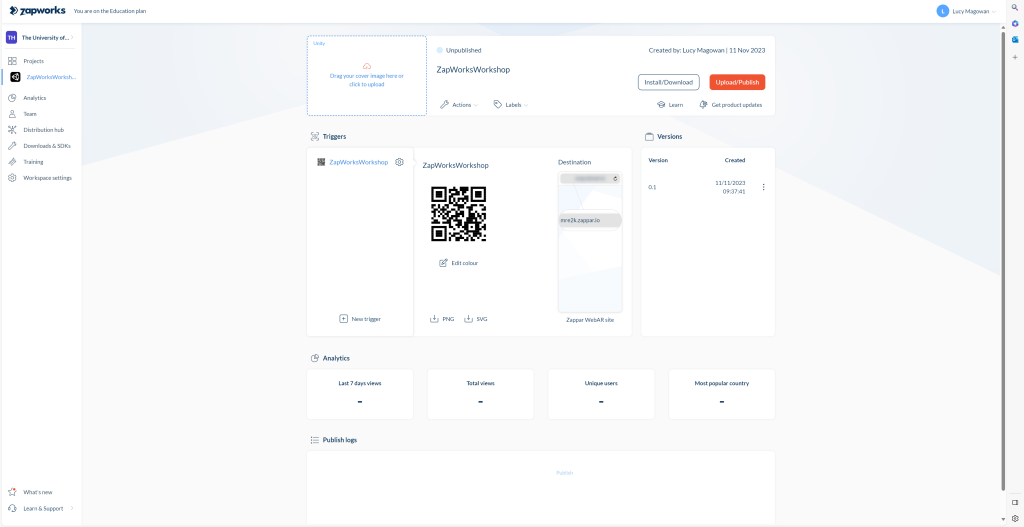

Uploading and Publishing to ZapWorks

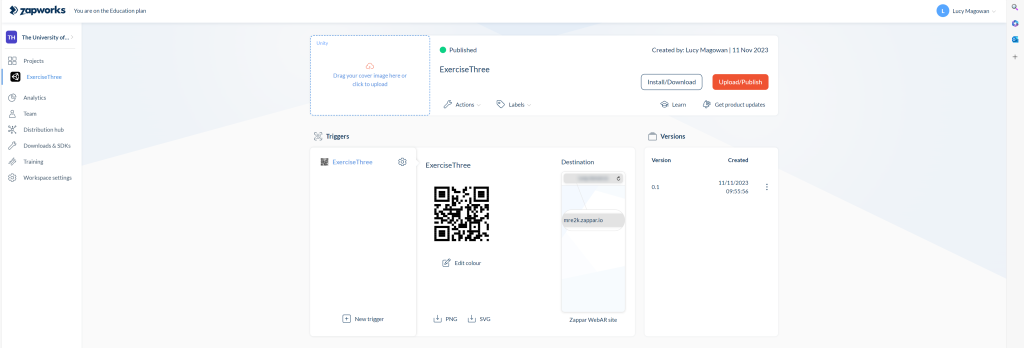

In the next part of the learning process, I published the Zappar creation to the web so that anyone can scan a QR code with their camera and bring the project to life. After creating a Universal AR project and selecting the Unity SDK, I could match my files to the options in Zapworks. I then changed the project title to match the trigger title and uploaded my zip file to be published. Now anyone who scans the on-screen QR code can see the 3D asset appear and interact with it in their environment.

Adding Animated Assets

When producing my own AR project, I need to choose a tracking image that follows current guidelines to improve the experience for the user. I will avoid images containing large blank spaces, as these do not provide the detailed graphical data the tracker needs, and I will also avoid repetitive patterns, as these may interfere with tracking performance. Zapworks’ technology processes images in grayscale, so to improve tracking performance I may preview my images in grayscale to check their contrast.
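
The grayscale check above can be approximated in code. This is a rough Python sketch for illustration only, not the method Zapworks uses. It converts RGB pixels to grayscale with the common luminance weights (0.299, 0.587, 0.114) and reports two heuristic numbers: the overall contrast, and the share of pixels falling into the single most common brightness band, which hints at large blank areas. The function names and the choice of 16 bands are assumptions.

```python
from collections import Counter


def to_grayscale(pixels):
    """Convert rows of (r, g, b) tuples (0-255) to rows of luminance values."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for r, g, b in row]
            for row in pixels]


def contrast_report(pixels, buckets=16):
    """Summarise grayscale contrast for a candidate tracking image.

    rms_contrast: standard deviation of brightness (higher means more contrast).
    dominant_share: fraction of pixels in the most common brightness band;
    a value close to 1.0 suggests the image is mostly one flat tone.
    Both are heuristics assumed for illustration, not official thresholds.
    """
    gray = [value for row in to_grayscale(pixels) for value in row]
    mean = sum(gray) / len(gray)
    rms_contrast = (sum((v - mean) ** 2 for v in gray) / len(gray)) ** 0.5

    bucket_width = 256 / buckets
    counts = Counter(int(v // bucket_width) for v in gray)
    dominant_share = max(counts.values()) / len(gray)
    return {"rms_contrast": rms_contrast, "dominant_share": dominant_share}
```

A useful property of this check is that it exposes colours that look different to the eye but are almost identical in grayscale: a pure red pixel `(255, 0, 0)` next to a mid-grey pixel `(76, 76, 76)` gives a contrast value close to zero, so that combination would be a poor choice for a tracking image.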

Future Projects

With these best practices in mind, it was time to create my own animated, interactive scene within Unity WebGL and Zapworks.

To begin, I had to decide what AR example I wanted to create. I thought about what would best grab the user’s attention and what could be made interactive to immerse the audience further in my Augmented Reality experience. I decided on a scene built around a page from a children’s book. The example page features dinosaurs and explains how to pronounce the names of different species. The background scene includes trees, land, and images of dinosaurs. My inspiration came from a reading app that embraces AR and lets children interact with the books they are reading (Lambert, 2018).

I sourced my 3D assets from a free online library, which I have referenced below, and edited my book image to follow best practice by changing its aspect ratio before using it with the Zapworks image trainer. Following the steps from the previous tutorial, I added all the assets to the Unity scene and adjusted the settings accordingly. I then published the Zappar content to the web through Zapworks so that anyone can access it using the QR code. This makes my AR work easily accessible to its intended audience and to anyone interested in an interactive, immersive environment. The final product was successful, and I now feel confident producing Augmented Reality content in future projects.

References

Aircards, 2020. Best Web AR Examples for 2020 | Augmented Reality Marketing. [Online]

Available at: https://www.youtube.com/watch?v=AUgN2XjgwO0

[Accessed 11 November 2023].

Gillis, A. S., 1999. Augmented Reality (AR). [Online]

Available at: https://www.techtarget.com/whatis/definition/augmented-reality-AR

[Accessed 11 November 2023].

Holly, R., 2017. The best places to use AR+ mode in Pokemon Go. [Online]

Available at: https://www.imore.com/best-places-use-ar-mode-pokemon-go

[Accessed 11 November 2023].

Lambert, L., 2018. Bookful Is a New Reading App That Embraces AR and a Love of Books. [Online]

Available at: https://www.readbrightly.com/bookful-reading-app/

[Accessed 12 November 2023].

studiousguy.com, 2023. 11 Examples of Augmented Reality in Everyday Life. [Online]

Available at: https://studiousguy.com/examples-augmented-reality/#11_Neurosurgery

[Accessed 11 November 2023].